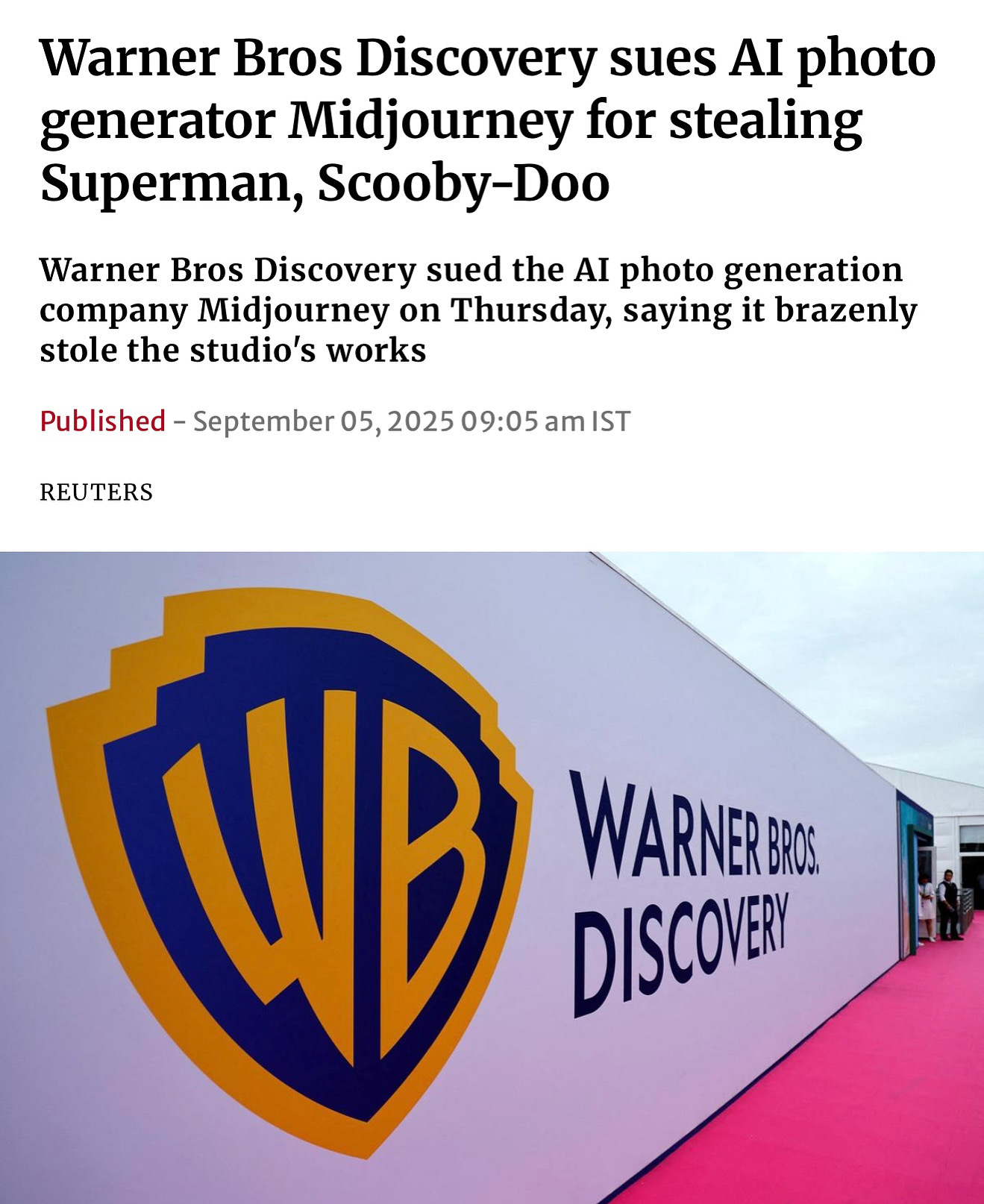

September 8, 2025

Warner Bros. and Midjourney: What the Lawsuit Means for AI’s Future

The battle over AI-generated content just escalated. Warner Bros. Discovery has filed a lawsuit against Midjourney, claiming the AI company generated images of Superman, Scooby-Doo, and other iconic characters without permission. On the surface, it might look like a fight over superheroes. But the implications go far beyond individual characters. This case could set a precedent that defines how intellectual property, from fictional characters to real human faces, is treated in the age of AI.
