In a world increasingly powered by AI, one of the most persistent challenges has been creating authentic images of real people. If you've ever tried to generate an image of a celebrity or public figure using platforms like DALL-E or Midjourney, you've likely encountered error messages, refusals, or at best, vague approximations that miss the mark.

But why is this the case? And how is Official AI addressing this fundamental challenge?

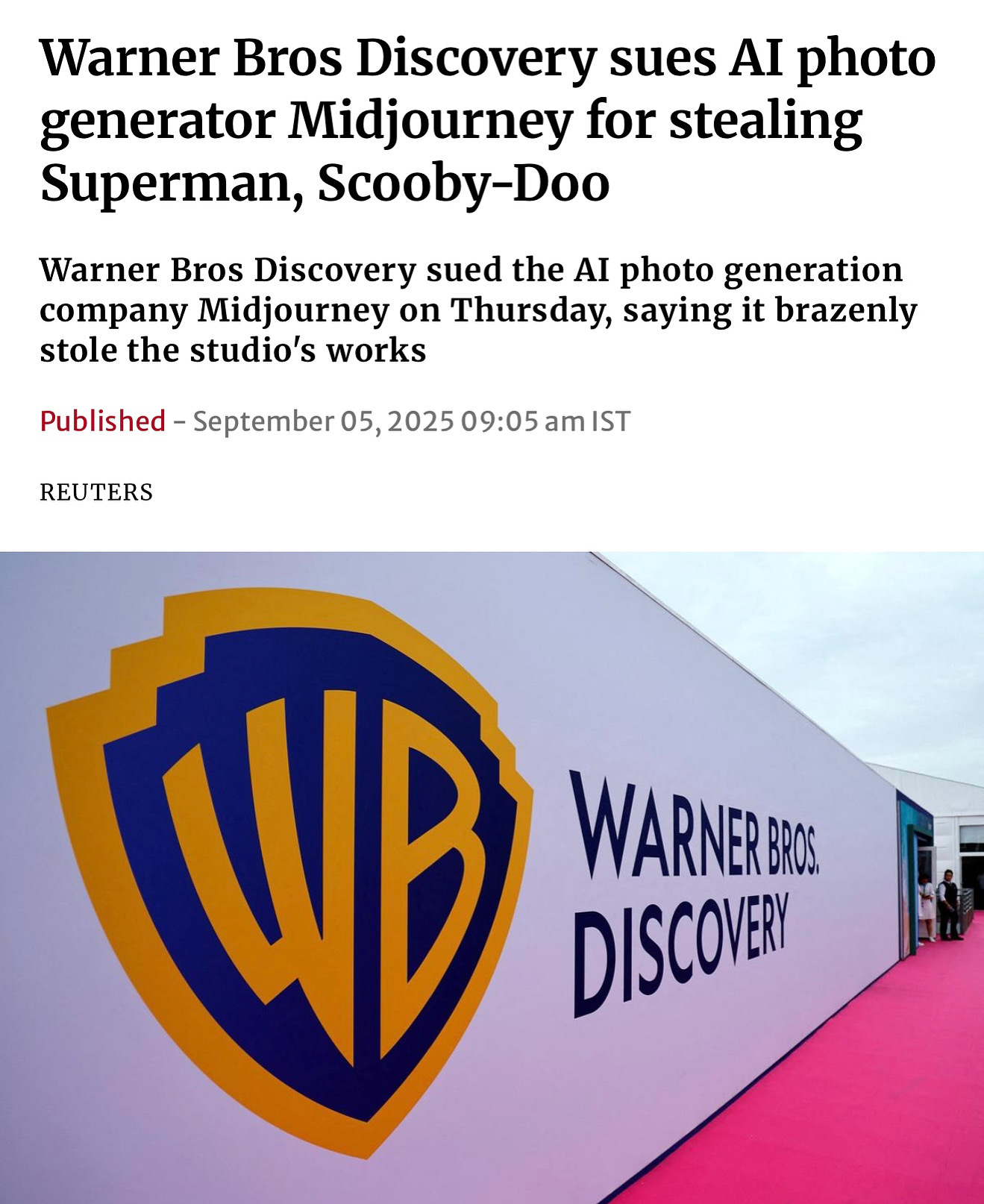

The Rights Roadblock: Why AI Platforms Block Real People

The short answer is legal risk. Most mainstream AI image generators restrict or refuse prompts that depict real people, because the output can run afoul of several distinct but related legal protections.

Right of Publicity vs. NIL vs. Copyright: Understanding the Differences

These three legal concepts often get confused, but they protect different things:

Right of Publicity is a person's right to control the commercial use of their identity, including their name, image, likeness, and sometimes voice. In the United States this right is set by state law, so its scope varies by state, but it generally prevents companies from using someone's identity for commercial gain without permission. It's what prevents a brand from using your face on a billboard without your consent.

NIL (Name, Image, and Likeness) is a term that became prominent with NCAA rule changes allowing student-athletes to monetize their personal brand. NIL is essentially shorthand for the elements protected under the Right of Publicity.

Copyright protects original creative works, including photographs. When AI models are trained on photos, they may inadvertently replicate elements of copyrighted images, potentially leading to infringement claims. This is a separate issue from using someone's likeness, though the two often overlap in practice.

The Industry Response: Block Everything

Given these legal risks, most AI image generators have taken a conservative approach: refuse or dilute requests involving real people. This explains why:

- DALL-E typically refuses to generate images of individuals by name

- Midjourney might create something that vaguely resembles a person, but lacks their true likeness

- Other platforms may generate a similar-looking but legally distinct character

While this approach protects the AI companies, it creates a significant barrier for legitimate uses. Brands that want to work with talent, celebrities who wish to scale their presence, and content creators looking to streamline production are all left without options.

The Official AI Solution: Vaults with Built-in Consent

This is where Official AI offers a different approach. Rather than simply blocking all content involving real people, we've created a system that ensures proper consent, credit, and compensation—the three pillars of ethical AI content creation.

Our vault technology is built on four components:

- Controlled Training Data: The talent provides authorized images and media to create a secure IP vault

- Consent Mechanism: The talent explicitly grants permission for specific uses

- Licensing Framework: Clear terms establish how the content can be used and ensure proper compensation

- Authenticated Output: Generated content includes provenance data showing it was created with permission
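To make the flow above concrete, here is a minimal Python sketch of how a consent check might gate generation and attach provenance. It is an illustration only, not Official AI's implementation: the `Vault` and `License` classes, the `authorize_generation` function, the field names, and the digest scheme are all hypothetical and assumed for this example.

```python
from dataclasses import dataclass, field
from datetime import date
import hashlib
import json


@dataclass(frozen=True)
class License:
    """Hypothetical consent grant: who may use the likeness, for what, until when."""
    licensee: str
    allowed_uses: frozenset
    expires: date
    fee_usd: float


@dataclass
class Vault:
    """Hypothetical IP vault: talent-supplied assets plus the licenses granted."""
    talent: str
    asset_ids: list
    licenses: list = field(default_factory=list)

    def find_license(self, licensee, use, on):
        """Return the first unexpired license covering this licensee and use, else None."""
        for lic in self.licenses:
            if lic.licensee == licensee and use in lic.allowed_uses and on <= lic.expires:
                return lic
        return None


def authorize_generation(vault, licensee, use, prompt, today):
    """Refuse unless consent exists; otherwise return a provenance record.

    The record stands in for real provenance metadata. The digest only
    fingerprints the record contents; it is not a cryptographic signature.
    """
    lic = vault.find_license(licensee, use, today)
    if lic is None:
        raise PermissionError(
            f"No active license for {licensee!r} to use {vault.talent!r} for {use!r}"
        )
    record = {
        "talent": vault.talent,
        "licensee": licensee,
        "use": use,
        "prompt": prompt,
        "fee_usd": lic.fee_usd,
        "license_expires": lic.expires.isoformat(),
        "generated_on": today.isoformat(),
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["digest"] = hashlib.sha256(payload).hexdigest()
    return record


# Example: one licensed use succeeds and yields a provenance record.
vault = Vault(talent="Example Talent", asset_ids=["img-001", "img-002"])
vault.licenses.append(
    License("Example Brand", frozenset({"social_ad"}), date(2030, 1, 1), 5000.0)
)
record = authorize_generation(
    vault, "Example Brand", "social_ad", "standing in Times Square", date(2025, 6, 1)
)
```

In this sketch, a request for a use the talent has not licensed (for example `"billboard"`), or one made after the license expires, raises `PermissionError` before any image would be generated. A production system would presumably rely on signed provenance metadata rather than a bare hash.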

What makes this revolutionary is that it transforms what would otherwise be a legal liability into a powerful tool for both talent and brands. Celebrities, athletes, and other public figures can now safely participate in the AI revolution on their own terms, while brands gain access to authentic, licensable content featuring real people.

Real-World Impact: From Months to Minutes

The practical implications of this technology are enormous. Consider the traditional process for creating content with talent:

- Scheduling conflicts that can delay productions for weeks or months

- Travel and logistics costs that can quickly escalate into six figures

- Limited output from expensive shoot days

- Rigid content that can't be easily modified once created

With Official AI's vault technology, brands can:

- Create authentic content in minutes rather than months

- Generate unlimited variations for different channels and markets

- Test concepts before full-scale production

- Update content seasonally without new shoots

Meanwhile, talent benefits from:

- New revenue streams without additional time commitments

- Greater control over how their likeness is used

- Protection against unauthorized AI-generated content

- Expanded partnership opportunities

Seeing is Believing

The proof is in the output. In the comparison image above, each platform was asked to create an image of Humphrey Bogart in Times Square:

- DALL-E refused to create the image, citing copyright restrictions

- Midjourney generated a vague approximation that lacks authentic detail

- Official AI produced an authentic, licensed image that truly captures Bogart's likeness

This difference isn't just visual—it's legal. The Official AI image is created with proper consent and licensing, making it safe for commercial use.

The Future of Authentic AI Content

As AI continues to transform content creation, the distinction between unauthorized replications and properly licensed content will become increasingly important. Brands will need to ensure they're using systems that respect rights and provide proper compensation to talent.

Official AI is leading this transformation by:

- Empowering talent to safely participate in the AI revolution

- Giving brands access to authentic content that was previously impossible to produce

- Creating a transparent ecosystem where consent and compensation are built in

- Establishing new standards for ethical AI-generated media

Starting Your Authentic AI Journey

Whether you're a brand looking to create more efficient content featuring real people, or talent wanting to safely monetize your likeness in the AI era, Official AI provides the secure foundation you need.

The future of authentic AI content isn't about working around legal restrictions—it's about working with them to create an ecosystem that benefits everyone. By combining cutting-edge AI with proper consent mechanisms, we're turning what was once a limitation into a competitive advantage.

And that future is already here.