The Future of Celebrity AI: Beyond Takedowns to Authentic Creation

Today's CNN article highlights a growing crisis facing celebrities in the age of AI: the proliferation of unauthorized deepfakes that misuse their likeness. The piece details how stars like Steve Harvey are increasingly targeted by AI-generated scams and fake content, with Harvey noting that these unauthorized uses of his image and voice are at "an all-time high."

The Two-Part Challenge of Celebrity AI

The CNN article brings needed attention to the reactive side of the AI challenge: dealing with unauthorized content after it has been created. Companies such as Loti and Vermillio, highlighted in the piece, offer services that help celebrities detect and remove these unauthorized uses.

This reactive approach is necessary in today's environment, but years in this space have taught me that detection and takedowns alone are not enough. We need to build an ecosystem that enables authorized, authentic AI content creation from the start.

Building the Path Forward: Prevention AND Protection

At Official AI, we believe the most effective approach combines both prevention through proper licensing AND protection through digital provenance.

Prevention starts with giving celebrities and their representatives proper control over how their likeness can be used in AI-generated content. By creating secure vaults of authorized training data, setting clear usage parameters, and ensuring proper compensation, we create a legitimate pathway for brands and content creators to work with talent.

Protection is equally important. Every piece of AI-generated content should carry clear provenance: a verifiable record of who created it, with what permissions, and for what purpose. This makes unauthorized content immediately distinguishable from licensed material. At Official AI, we believe in open standards for provenance, which is why we're proud members of the Content Authenticity Initiative (CAI) and build on the open C2PA standard rather than relying on proprietary blockchain solutions.
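
To make "verifiable record" concrete, here is a minimal Python sketch of how a provenance record can be cryptographically bound to a piece of content. It is not the C2PA manifest format and not Official AI's implementation: the field names, the `make_record` and `verify_record` helpers, and the signing key are all hypothetical, and an HMAC with a shared secret stands in for the certificate-based public-key signatures that C2PA actually uses.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. Real C2PA manifests are signed with an X.509
# certificate-backed private key, not a shared secret like this one.
SIGNING_KEY = b"demo-key-not-for-production"


def make_record(content: bytes, creator: str, license_id: str, purpose: str) -> dict:
    """Build a provenance record bound to the exact bytes of the content."""
    claims = {
        "creator": creator,      # who created it
        "license": license_id,   # with what permissions (hypothetical license ID)
        "purpose": purpose,      # for what purpose
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialize deterministically so signer and verifier hash identical bytes.
    payload = json.dumps(claims, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_record(content: bytes, record: dict) -> bool:
    """Return True only if the claims are untampered and match the content."""
    payload = json.dumps(record["claims"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["signature"]):
        return False  # claims were altered or signed with a different key
    # The claims are authentic; now check they describe these exact bytes.
    return record["claims"]["content_sha256"] == hashlib.sha256(content).hexdigest()


video = b"example licensed ad bytes"
record = make_record(video, "Example Brand", "license-0001", "30-second product ad")
print(verify_record(video, record))            # True
print(verify_record(video + b"edit", record))  # False
```

Any change to the content bytes or to the claims makes verification fail, which is the property that lets licensed material be told apart from altered or unlicensed copies. Public-key signatures go one step further than this sketch: anyone can verify a record without holding the signer's secret, which is why open standards like C2PA build on certificates rather than shared keys.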

Why Takedowns and Proprietary Systems Alone Aren't Enough

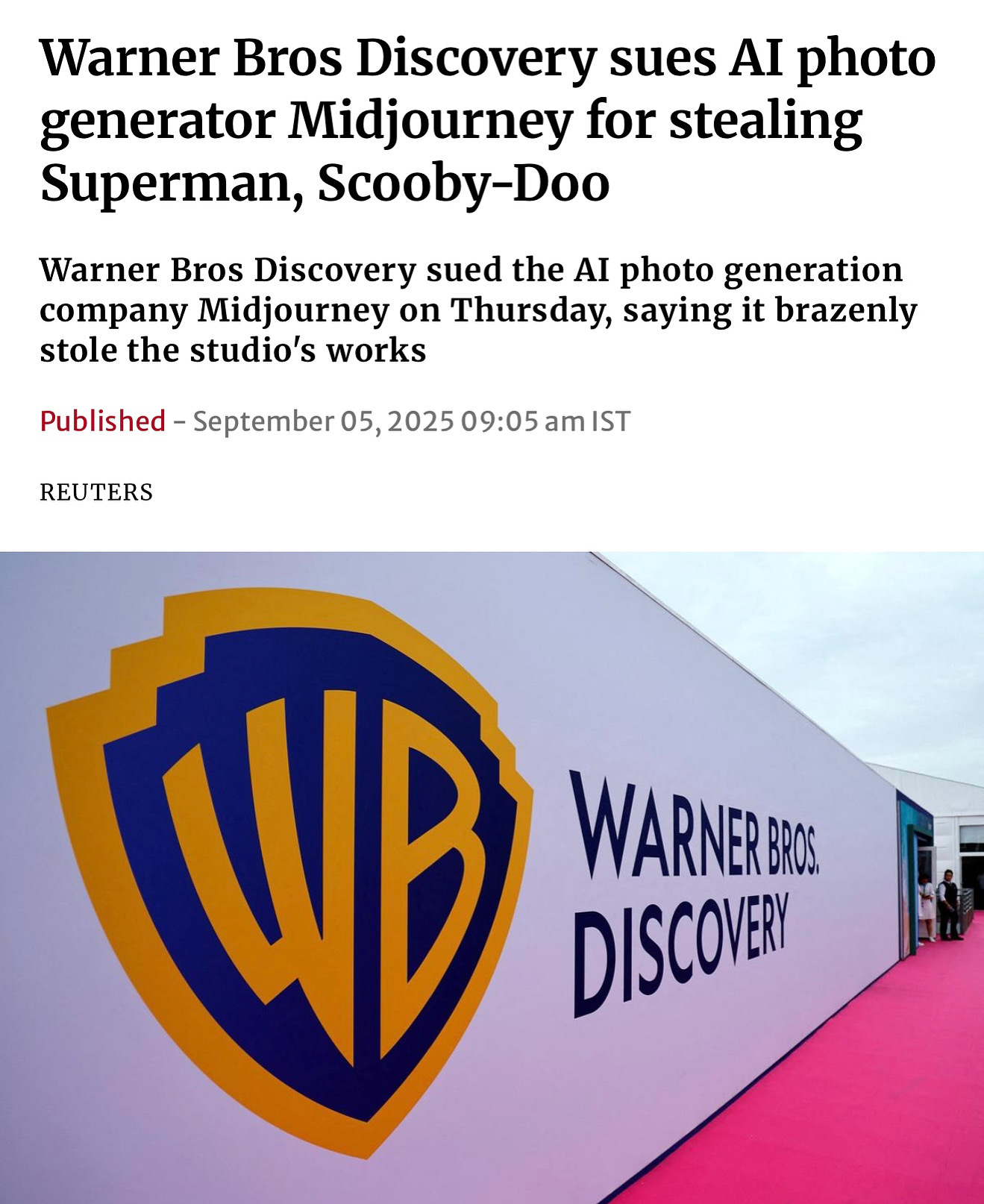

While services focused on finding and removing unauthorized content play a vital role in today's environment, they represent only half of the solution. Similarly, closed, proprietary content-tracking systems, such as the blockchain-based approaches mentioned in the article, create additional barriers rather than comprehensive solutions. Here's why a more open approach is needed:

- Scale challenges: With millions of new pieces of AI content created daily, detection and takedown will always be a step behind. I've seen this movie before with music licensing: reactive approaches just can't keep up with the volume.

- Economic opportunity: Talent should be able to participate in and benefit from the AI revolution, not just defend against its misuses. At Official AI, we're creating those pathways.

- Creative potential: AI offers unprecedented opportunities for creators and talent to collaborate in ways that weren't previously possible. I've always believed technology should enhance creativity, not restrict it.

- Interoperability challenges: Proprietary, closed systems for tracking content (like blockchain solutions) don't integrate well with existing media ecosystems and create unnecessary technical barriers.

- Industry adoption: Open standards supported by major platforms and technology companies—like the C2PA standard we use at Official AI—have much greater potential for wide industry adoption than proprietary alternatives.

The NO FAKES Act and similar legislation discussed in the CNN article are important steps forward. But alongside regulatory frameworks that penalize bad actors, we need systems that enable good actors to create legitimate, authorized content.

A Vision for Authentic AI

At Official AI, we're ensuring that AI-generated content featuring real people is:

- Created with consent from the start

- Clearly labeled with its provenance

- Backed by fair licensing agreements that properly compensate talent

- Creatively empowering for both talent and brands

This vision requires moving beyond the current "Whack-a-Mole" approach described in the CNN article toward a comprehensive system that includes both protection against unauthorized uses and pathways for authorized creation.

Joining Forces for a Better Solution

The challenges highlighted in the CNN article are real and urgent. Services that help celebrities detect and remove unauthorized content serve an important purpose in today's environment.

But to truly solve this problem, we need to build an ecosystem that makes unauthorized content the exception rather than the rule. That means creating straightforward, ethical ways for brands and creators to work with talent through AI.

By combining prevention through proper licensing with protection through digital provenance, we can transform AI from a threat to talent into an opportunity. That's the future we're building at Official AI — one where Steve Harvey and others can confidently participate in the AI revolution rather than simply defending against it.