There’s nothing like a presidential election to put national issues at the forefront, especially when an issue bears directly on the integrity of the election itself. One of the most pressing is the growing concern over deepfakes and other AI-generated misinformation. Are the sizes of rally crowds being faked? Did the candidates actually say what a video shows them saying? Behind these questions is a worry about how deepfakes could distort voter perception. As deepfakes become more sophisticated, there is an increasing push from lawmakers to regulate their use, especially in political contexts. The federal government and several states have introduced or passed legislation aimed at curbing the use of deepfakes in election-related content. For instance, the proposed "DEEPFAKES Accountability Act" seeks to impose criminal penalties on those who create or distribute deepfakes intended to deceive voters or harm political candidates.

But the issue of deepfakes goes much deeper. With so much media being completely or partially produced using artificial intelligence, protecting the name and likeness rights of talent has never been more crucial. The rise and ubiquity of generative AI technologies, including deepfakes and synthetic media, have brought new challenges and opportunities for the entertainment industry. Understanding these technologies is essential not just for safeguarding personal identity but also for fostering positive and sustainable working relationships between content creators, talent, and their representatives. The ability to distinguish between harmful deepfakes and benign synthetic media can help talent maintain control over their image and ensure that innovative AI tools are used ethically and collaboratively. This understanding is key to navigating the evolving landscape of digital content creation and upholding the integrity of creative industries.

Deepfakes vs. Synthetic Media

The terms deepfake and synthetic media are not mutually exclusive. A deepfake is essentially a highly realistic piece of synthetic media, often created with the intention to deceive. Deepfakes usually involve unauthorized use of someone’s image or voice, making them look or sound like they’re doing or saying something they never did. This malicious intent is what gives deepfakes their notorious reputation.

On the flip side, synthetic media covers a broader range of AI-generated content, and it’s not always about trickery. Think of synthetic media as the umbrella term for any media created by artificial intelligence. This can include everything from deepfakes to more benign and creative applications like AI-generated art or personalized video greetings. While deepfakes are often linked with deception, synthetic media can be used positively, provided that the people whose likeness or content is used have given consent and receive credit.

Challenges in Regulation and Enforcement

Now, let’s talk about regulation. Who’s in charge of policing this stuff? It's a tricky question. Detection tools like TrueMedia are doing valuable work in identifying deepfakes, especially in the political arena. However, they don’t have the authority to enforce anything. The real power lies in regulatory frameworks. Right now, the most robust tool we have is the patchwork of right of publicity laws across different states. These laws allow individuals to control the commercial use of their identity, but because they vary from state to state, there's a push for more cohesive federal regulations.

For effective enforcement, a comprehensive approach is essential. This involves not just detecting deepfakes but creating an ecosystem for authentic media. Standards like C2PA (Coalition for Content Provenance and Authenticity), initiated by organizations including the BBC, Adobe, and Microsoft, play a crucial role. They record where a piece of media came from and how it has been modified, which makes it easier to verify authenticity and to distinguish between legitimate and deceptive uses.
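To make the idea of tracking origin and modifications concrete, here is a minimal sketch of a provenance record kept as a chain of hashes. It is not the C2PA format or any C2PA library; real C2PA manifests are cryptographically signed and embedded in the media file, which this sketch leaves out. The function names (`add_entry`, `verify`) and the field names are hypothetical and chosen only for illustration.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Hex digest used as a fingerprint for content and for manifest entries."""
    return hashlib.sha256(data).hexdigest()


def add_entry(manifest: list, action: str, actor: str, content: bytes) -> list:
    """Return a new manifest with one more provenance entry appended.

    Hypothetical helper: each entry records who did what, a hash of the
    content after that step, and the hash of the previous entry.
    """
    entry = {
        "action": action,
        "actor": actor,
        "content_hash": sha256_hex(content),
        "prev_entry_hash": manifest[-1]["entry_hash"] if manifest else "",
    }
    entry["entry_hash"] = sha256_hex(json.dumps(entry, sort_keys=True).encode("utf-8"))
    return manifest + [entry]


def verify(manifest: list, content: bytes) -> bool:
    """Check that the chain is unbroken and that it ends at this exact content."""
    prev_hash = ""
    for entry in manifest:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_entry_hash"] != prev_hash:
            return False
        if sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8")) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return bool(manifest) and manifest[-1]["content_hash"] == sha256_hex(content)


# Placeholder data standing in for real media bytes.
original = b"raw interview footage"
edited = b"raw interview footage, color corrected"

manifest = add_entry([], "captured", "Example Studio", original)
manifest = add_entry(manifest, "edited", "Example Editor", edited)

print(verify(manifest, edited))               # True
print(verify(manifest, b"swapped-in video"))  # False
```

Because each entry includes the hash of the one before it, quietly rewriting an earlier step or swapping in different content causes verification to fail. What a hash chain alone cannot show is who created the record, which is why a real provenance standard also relies on signatures.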

Despite the digital footprint left by online activities, tracking down and enforcing penalties against malicious actors is no walk in the park. Once a deepfake is out there, the damage is often immediate and significant. The problem isn’t just catching the perpetrator but dealing with the fallout quickly enough to mitigate the harm. This is similar to real-life scenarios where a crime is committed, but by the time enforcement catches up, the impact has already been felt.

Navigating the world of deepfakes and synthetic media requires a nuanced understanding and a robust framework for regulation and enforcement. While deepfakes carry a darker, more deceptive connotation, synthetic media can be a powerful tool for positive innovation when used ethically. As the technology continues to evolve, so must our approaches to education, regulation, and enforcement to ensure that this digital revolution benefits everyone.

Open vs. Closed Technology

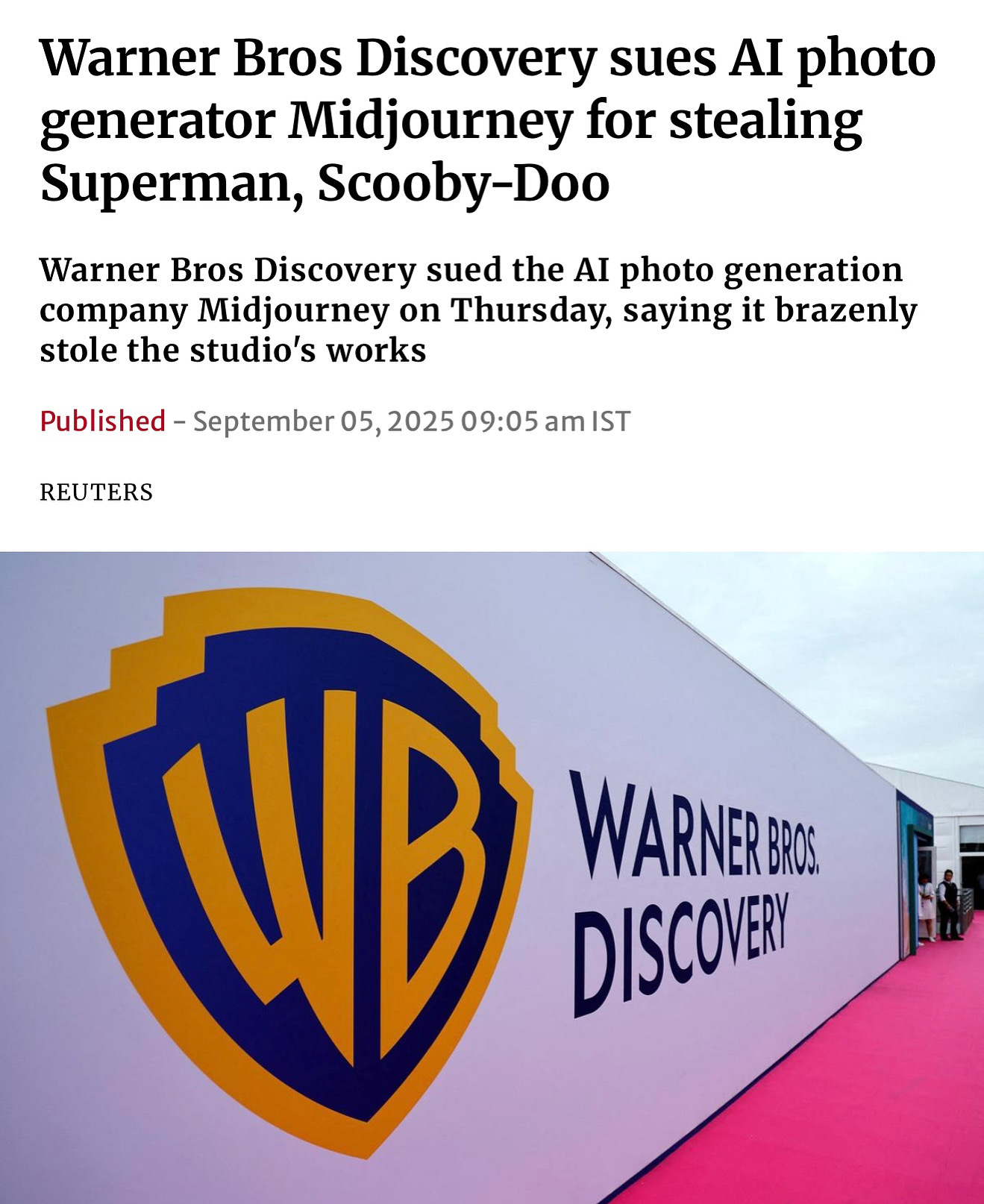

Another hot topic is the debate between open and closed AI technologies. Should AI models be open-source or closed? Each approach has its pros and cons. Open-source models, such as those hosted on platforms like Hugging Face, offer transparency and community collaboration. They can be used for a wide range of purposes, depending on the user's intent.

Closed models, on the other hand, are developed by companies like OpenAI and Midjourney, which often don’t disclose the models' inner workings or the training data used. While this can protect intellectual property, it also raises concerns about accountability and transparency. Whether a model is open or closed, the potential for misuse remains, and it ultimately depends on how humans choose to employ these tools. It’s not about the technology itself being good or bad but about how it’s used.

Educating Talent

When it comes to talent, there’s a significant knowledge gap. Many people in the creative industry might know when they're being exploited but lack a detailed understanding of AI, synthetic media, and their rights. There’s a pressing need to educate them about the nuances of these technologies. They need to understand that synthetic media can be a force for good, creating opportunities that otherwise would not exist. It’s about ensuring they’re aware of the risks and the opportunities alike, and that they know how to navigate this new landscape.

A Bill of Rights for AI Users

Given the complexities of the AI media landscape, there’s a growing call for a Bill of Rights for AI users. This would outline the fundamental rights related to consent, credit, and compensation in the realm of synthetic media. Organizations like the Privacy Foundation have laid out some principles, but there’s a need for enforceable rights. Without the teeth to back them up, these rights are more like suggestions than actual protections. It’s crucial to establish clear guidelines and mechanisms to ensure that individuals are protected from misuse and can benefit from the positive aspects of AI-generated media. All stakeholders need to feel that they are operating within an ecosystem of authentication.