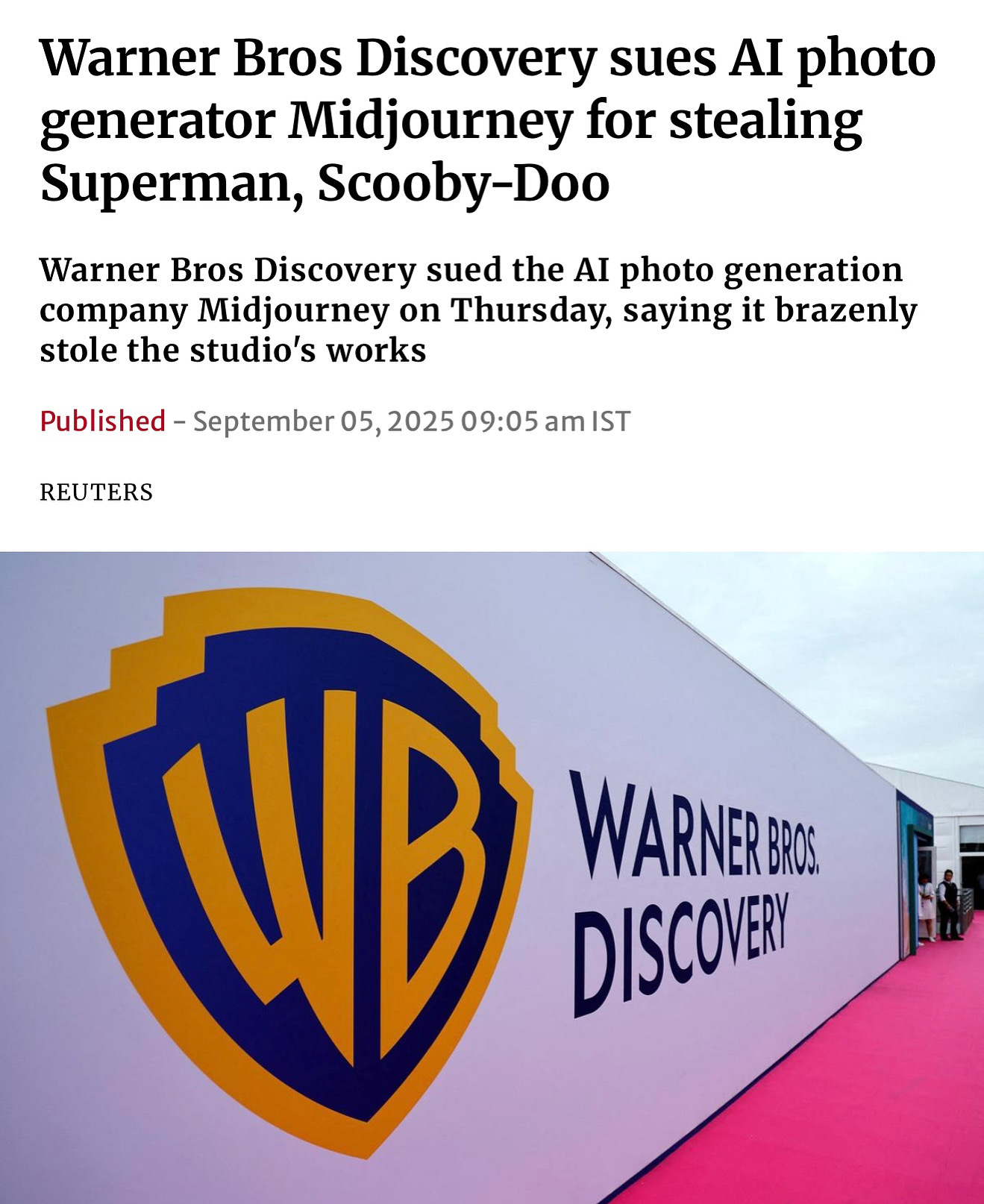

The YouTube-CAA partnership announced today represents an important step forward in establishing guardrails for AI-generated content. It's particularly encouraging to see CAA embrace the fundamental principles of consent, credit, and compensation, which have been core to Official AI's mission since our founding.

Their focus on these three essential elements reflects a growing industry consensus around what's needed to build trust in AI-powered content creation.

Having worked extensively with content identification technology since the early days of Content ID, I see both promising developments and potential challenges ahead. YouTube's proactive approach to building detection systems and giving talent direct control over takedowns shows the industry is moving in the right direction. This echoes my experience at Audiosocket and LIDCORE, where we saw that empowering rights holders with tools for managing their IP was crucial for building trust in new technology platforms.

However, we need to be honest about the limitations of takedown-based systems. Their reactive nature puts the burden on talent to constantly monitor and flag unauthorized content - an approach we learned was unsustainable during the early days of Content ID. It's like trying to plug holes in a dam that keeps springing new leaks. At some point, you need to build a better dam.

At Official AI, we believe the future lies in proactive authentication and controlled creation rather than retroactive enforcement. We've built our entire platform around ensuring talent maintains control through consent, receives proper credit for their contributions, and participates fairly in the compensation generated from their digital likeness. It's validating to see major industry players converge around this framework, but implementation is where the real work begins.

What stands out about this announcement is that major players like YouTube and CAA acknowledge that addressing AI likeness rights requires both technological solutions and industry collaboration. The challenge now moves from alignment on principles to effective implementation. While takedown systems are an important step, they're just that - a step. The real solution needs to be built into the foundation of how AI content is created and authenticated.

The key will be ensuring these tools evolve beyond just takedown capabilities to enable new creative and commercial opportunities while maintaining proper consent and compensation frameworks. At Official AI, we're focused on building that future - where authenticity is built in from the start, not enforced after the fact.

This is a pivotal moment for the industry. The problems we're facing with unauthorized AI content won't be solved by takedown systems alone, but it's heartening to see more players acknowledging the core principles needed to build trust in this new era. We look forward to working alongside industry partners who share our commitment to building an ethical AI content ecosystem that works for everyone.